IEEE Transactions on Visualization and Computer Graphics (TVCG)

Outdoor Markerless Motion Capture with Sparse Handheld Video Cameras

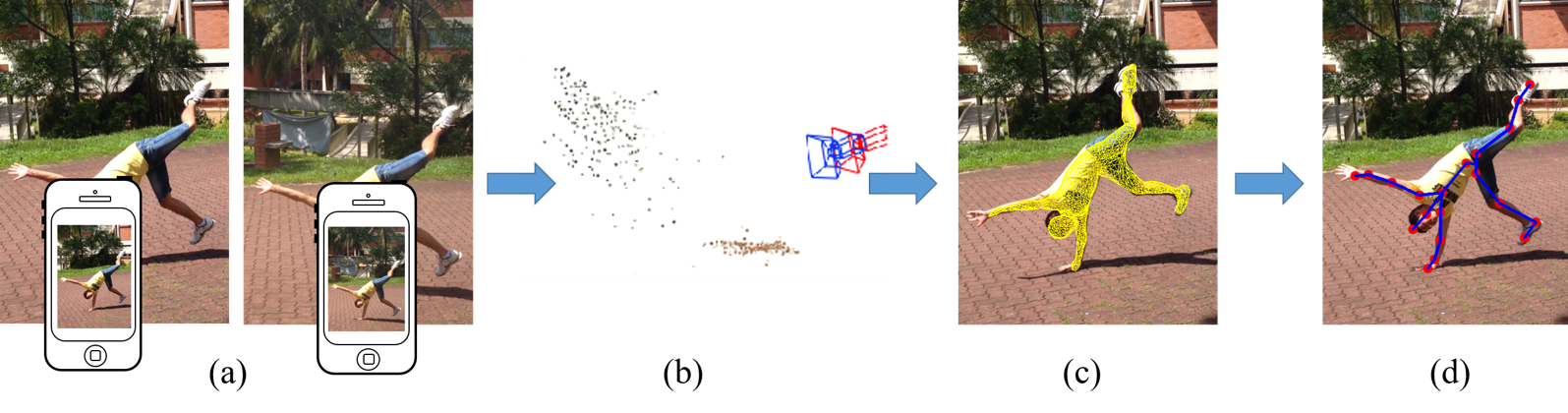

System pipeline.

(a) Our system takes multiple synchronized videos captured by handheld cameras as input. (b) We adopt the CoSLAM method to calibrate all cameras in 3D space. (c) Our motion tracking system estimates the skeleton motion with a sample-based method. (d) We use a gradient-based method to refine the skeleton pose.

Abstract

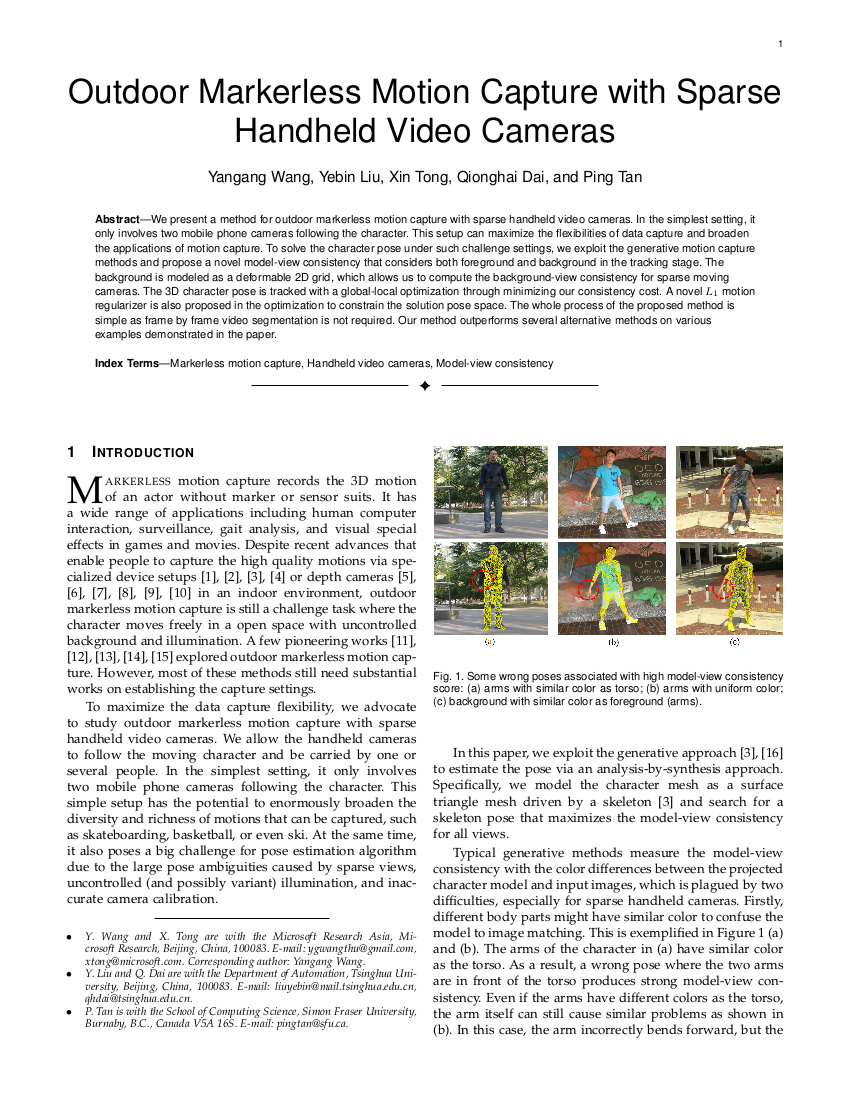

We present a method for outdoor markerless motion capture with sparse handheld video cameras. In the simplest setting, it involves only two mobile phone cameras following the character. This setup maximizes the flexibility of data capture and broadens the applications of motion capture. To solve for the character pose under such challenging settings, we build on generative motion capture methods and propose a novel model-view consistency that considers both foreground and background in the tracking stage. The background is modeled as a deformable 2D grid, which allows us to compute the background-view consistency for sparse moving cameras. The 3D character pose is tracked with a global-local optimization that minimizes our consistency cost. A novel L1 motion regularizer is also proposed in the optimization to constrain the solution pose space. The whole process is simple, as frame-by-frame video segmentation is not required. Our method outperforms several alternative methods on various examples demonstrated in the paper.
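The global-local tracking idea described in the abstract can be illustrated with a toy sketch. This is not the paper's implementation: the function names (`total_cost`, `track_frame`), the parameters, and the quadratic stand-in for the model-view consistency cost are all hypothetical. The L1 motion regularizer is assumed here to be the L1 norm of the frame-to-frame pose change, since its exact form is not given on this page. The sketch first samples candidate poses around the previous frame's pose (global stage), then refines the best sample with subgradient steps (local stage).

```python
import numpy as np

def total_cost(pose, prev_pose, data_cost, lam):
    # Data term plus an L1 penalty on the frame-to-frame pose change.
    # The L1 form is an assumption made for this illustration.
    return data_cost(pose) + lam * np.abs(pose - prev_pose).sum()

def track_frame(prev_pose, data_cost, data_grad, lam=0.1, n_samples=200,
                sigma=0.3, n_steps=100, step=0.05, seed=0):
    rng = np.random.default_rng(seed)

    # Global stage: draw Gaussian samples around the previous pose
    # (the previous pose itself is kept as a candidate) and pick the cheapest.
    noise = sigma * rng.standard_normal((n_samples, prev_pose.size))
    samples = np.vstack([prev_pose, prev_pose + noise])
    costs = [total_cost(s, prev_pose, data_cost, lam) for s in samples]
    best = int(np.argmin(costs))
    pose, best_cost = samples[best].copy(), costs[best]

    # Local stage: subgradient descent on the same cost. A step is accepted
    # only if it lowers the cost; otherwise the step size is halved.
    for _ in range(n_steps):
        grad = data_grad(pose) + lam * np.sign(pose - prev_pose)
        candidate = pose - step * grad
        cost = total_cost(candidate, prev_pose, data_cost, lam)
        if cost < best_cost:
            pose, best_cost = candidate, cost
        else:
            step *= 0.5
    return pose

# Toy usage: a quadratic stand-in for the consistency cost (hypothetical).
target = np.array([0.5, -0.2, 0.0])
prev = np.zeros(3)
pose = track_frame(prev,
                   data_cost=lambda p: float(((p - target) ** 2).sum()),
                   data_grad=lambda p: 2.0 * (p - target))
```

In this toy setting the L1 term pulls the estimate toward the previous pose and tends to leave unchanged pose parameters near their previous values, which is one common motivation for choosing an L1 penalty over a squared one.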

Related Work

Outdoor markerless motion capture has the potential to enormously broaden the diversity and richness of motions that can be captured. Check out some related work below:

- Markerless Motion Capture with Unsynchronized Moving Cameras. By Hasler et al. (CVPR2009)
- Outdoor Human Motion Capture using Inverse Kinematics and von Mises-Fisher Sampling. By Pons-Moll et al. (ICCV2011)
- Efficient ConvNet-based Marker-less Motion Capture in General Scenes with a Low Number of Cameras. By Elhayek et al. (CVPR2015)
- Model-based Outdoor Performance Capture. By Robertini et al. (3DV2016)

Reference

Yangang Wang, Yebin Liu, Xin Tong, Qionghai Dai and Ping Tan. "Outdoor Markerless Motion Capture with Sparse Handheld Video Cameras". IEEE Transactions on Visualization and Computer Graphics, 24(5):1856-1866, 2018.

Acknowledgments: We would like to thank Kaimo Lin for helping us capture the majority of the data. This work was supported in part by the National Key Foundation for Exploring Scientific Instrument No.2013YQ140517, the National Science Foundation of China (NSFC) No.61522111 and No.61531014.